Our work focuses on mechanisms, drives, and control technology for grippers. One line of research was to test the 3-finger SDH-2 gripper from Schunk (Germany) extensively and to develop control techniques that make the device intuitive to operate. We proposed various methods and tested several control interfaces. The Robot Operating System (ROS) was used in all of our research within the RobREx project; we have even prepared dedicated web-based software to familiarize our students with this middleware. We exploited the simulation and control capabilities of ROS. First, a model of the gripper was created using Gazebo, rviz and V-REP; then the external interfaces were connected to ROS, and the control methods were tested in simulation before moving to the real gripper. These tests are presented in the videos “Demo of sensor glove to dexterous gripper mapping”, “Mapping_without_mixing_difficult_control”, “Hybrid control of Schunk Dexterous Gripper 2014”, and “LeapMotion_controlled_manipulator_model”. The real gripper was then connected to ROS so that the sensor glove or the Leap Motion can control the mechanical unit, as shown in the video “3finger_gripper_LeapMotion_controlled_WMV V9”.

We have tested several interfaces that are based on integrated vision systems such as Kinect and Leap Motion. Their APIs allow precise tracking of the position and orientation of a human hand or other objects, as shown in “LeapMotion_controls_visual_gripper”.

We have focused our research on tools that support intuitive and precise approaching and gripping of an object. The visual gripper is a software tool for controlling the position, orientation, and opening and closing of a gripper. The operator uses a mockup gripper that is tracked by the vision system to control a real gripper mounted on a manipulator.

In the second approach, our SDH-2 gripper control system uses two sources of information: readings from flexion sensors mounted in the sensor glove and data from the vision system. The rotation angles of the gripper’s fingers are controlled by the flexion of the operator’s fingers, while the position and orientation of the entire Schunk hand, as well as the mode of operation, can be changed based on the vision sensor.
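The split between the two information sources can be illustrated with a minimal sketch. It assumes flexion readings normalized to the range 0 to 1 and a simple linear map onto each joint's range; the names `JointRange`, `flexion_to_angle`, and `hybrid_command`, as well as the command layout, are hypothetical and are not taken from the actual control system.

```python
from dataclasses import dataclass
from typing import Dict, Sequence, Tuple


@dataclass(frozen=True)
class JointRange:
    """Assumed joint limits in radians (illustrative values only)."""
    lower: float
    upper: float


def flexion_to_angle(flexion: float, joint: JointRange) -> float:
    """Linearly map a normalized flexion (0 = straight, 1 = fully bent)
    onto the joint range, clamping out-of-range sensor readings."""
    clamped = min(1.0, max(0.0, flexion))
    return joint.lower + clamped * (joint.upper - joint.lower)


def hybrid_command(
    flexions: Sequence[float],
    ranges: Sequence[JointRange],
    hand_pose: Tuple[float, ...],
    mode: str,
) -> Dict[str, object]:
    """Combine both sources: the glove sets the finger angles, while the
    vision system supplies the hand pose and the operating mode."""
    if len(flexions) != len(ranges):
        raise ValueError("one flexion reading is required per joint")
    angles = [flexion_to_angle(f, r) for f, r in zip(flexions, ranges)]
    return {"mode": mode, "hand_pose": tuple(hand_pose), "finger_angles": angles}
```

In this sketch the vision data passes through unchanged, which keeps the glove mapping independent of how the hand pose is tracked.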

The SDH has 7 degrees of freedom, so the human hand has enough dexterity to control it through suitable movements. Its kinematics, however, differs from that of the human hand: apart from the number of fingers, the two have different flexion ranges, and a human cannot rotate fingers around the base of the palm. Our first approach assumed an arbitrary choice of operator fingers that then directly control the gripper’s movement (video: Mapping_without_mixing_difficult_control). In our second approach, we propose a mapping based on recognizing the user’s intention. It is still based on the detection of grip types, but the obtained information is then blended into a single behavior using characteristic features rather than strict classification (video: Demo of sensor glove to dexterous gripper mapping).
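One way to picture blending instead of strict classification is a weighted average of per-grip joint postures. The sketch below is only an illustration under assumptions: the grip names, the prototype postures, and the idea of normalizing non-negative feature scores into weights are hypothetical and may differ from the mapping actually used.

```python
from typing import Dict, List

# Hypothetical 7-joint prototype postures (radians), one per grip type.
PROTOTYPES: Dict[str, List[float]] = {
    "cylindrical": [0.0, -0.3, 0.6, -0.3, 0.6, -0.3, 0.6],
    "pinch":       [1.0, -0.1, 0.9, -0.1, 0.9, -1.2, 0.0],
}


def blend_grips(scores: Dict[str, float],
                prototypes: Dict[str, List[float]]) -> List[float]:
    """Blend grip prototypes into one joint target.

    `scores` holds a non-negative similarity per grip type (assumed to be
    computed from characteristic hand features). A strict classifier would
    pick the best-scoring grip; here every grip contributes in proportion
    to its score.
    """
    total = sum(scores.values())
    if total <= 0.0:
        raise ValueError("at least one grip score must be positive")
    n_joints = len(next(iter(prototypes.values())))
    blended = [0.0] * n_joints
    for name, score in scores.items():
        weight = score / total
        for i, q in enumerate(prototypes[name]):
            blended[i] += weight * q
    return blended
```

With equal scores the target lies halfway between the two postures, and as one score dominates the result approaches that grip's prototype, so the gripper moves smoothly between grip types instead of switching abruptly.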

We further propose gestural interaction as an additional way to control the gripper. Gestures provide a way to pass symbolic commands to the system through the movement and pose of the hands. Using specific movements to control machines has become very popular with the advent of smartphones and tablets with touchscreens: using fingers, the user can not only click but also swipe, drag, pinch, or rotate to trigger specific behaviors of the computer system (video: Hybrid control of Schunk Dexterous Gripper 2014).
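As a simple illustration of turning hand movement into a symbolic command, the sketch below detects a horizontal swipe from a short window of tracked palm positions. The function name, the thresholds, and the input format (timestamp in seconds, palm x-coordinate in meters) are assumptions made for this example and do not describe the gesture recognizer used in the project.

```python
from typing import Optional, Sequence, Tuple


def detect_swipe(
    samples: Sequence[Tuple[float, float]],
    min_distance: float = 0.15,   # assumed minimum travel in meters
    max_duration: float = 0.5,    # assumed maximum gesture time in seconds
) -> Optional[str]:
    """Return "swipe_left", "swipe_right", or None.

    `samples` is a time-ordered window of (timestamp_s, palm_x_m) pairs.
    A swipe is reported when the palm travels far enough along x within
    the allowed time; slow or small motions are ignored.
    """
    if len(samples) < 2:
        return None
    t_start, x_start = samples[0]
    t_end, x_end = samples[-1]
    if t_end - t_start > max_duration:
        return None
    dx = x_end - x_start
    if abs(dx) < min_distance:
        return None
    return "swipe_right" if dx > 0 else "swipe_left"
```

The returned label could then be mapped to a discrete action such as switching the operating mode, keeping symbolic commands separate from the continuous finger mapping.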

After extended tests of the tactile sensors of the SDH-2, we have synthesized an admittance control algorithm that uses information from the tactile sensors to grip objects with a presumed stiffness, as shown in the videos whose names start with 3finger_gripper_impedance_control.

To provide haptic feedback, we have designed and developed a prototype of haptic tubes mounted on the sensor glove. The tubes exploit the jamming phenomenon, as shown in the video Haptic_jamming_tube.

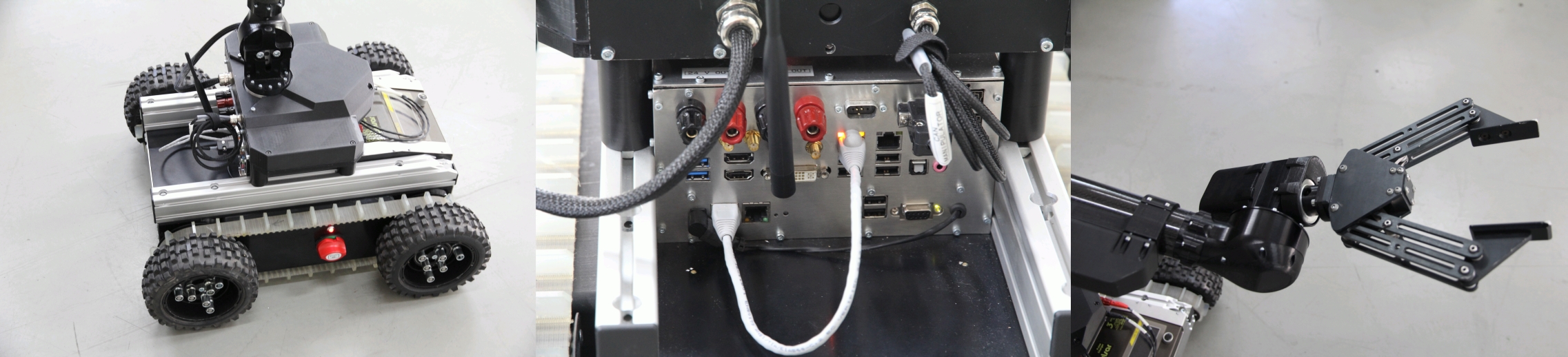

The other technology we have developed is a 2-finger gripper with tendons, electric drives, and passive elasticity provided by springs. The entire gripper was produced by 3D printing (ABS material). With a mass of less than 0.5 kg, the gripper is able to grip and lift an object weighing over 1 kg. Thanks to a spring-based tension mechanism, the gripper can embrace and safely grip different objects, as shown in the videos with names starting with 2finger_gripper.